Moon Buddy, Mission Control and Math

CMU Researchers Develop Communication Strategies To Support Space Exploration

Susie Cribbs (DC 2000, 2006)

Everyone's heard the old adage that the only way to get to Carnegie Hall is "practice, practice, practice." But how does Carnegie Mellon get to the Moon (and beyond)? Communications, communications, communications.

Right now, students and faculty members are creating new ways for astronauts to connect with one another in space, for people on Earth to stay in touch with rovers and robots off planet, and for data to stay secure as we move into a new era of space exploration.

MoonBuddy Makes a Difference in Future Moon Missions

Angelica Bonilla Fominaya, a senior studying computer science and art

As a kid, Angelica Bonilla Fominaya dreamed of working for NASA. But as someone interested in arts and technology — not straight up engineering or physics — she didn't think it was possible. Then an internship at NASA her junior year in CMU's Bachelor of Computer Science and Arts program helped change her mind. And it led to changes in how astronauts communicate in space.

While a NASA intern, Bonilla Fominaya learned about the agency's Spacesuit User Interface Technologies for Students (SUITS) Design Challenge, which tasked teams of college students with designing and creating spacesuit information displays within augmented reality (AR) environments that could be adapted for use in upcoming Artemis missions to the Moon. Bonilla Fominaya jumped at the opportunity to gather a team and get involved.

“A couple of my friends are really interested in user interfaces or virtual reality, and we thought it would be fun to try,” said Bonilla Fominaya, who served as CMU's team lead. “We spent the fall 2021 semester writing a proposal with the help of Human-Computer Interaction Institute (HCII) faculty member David Lindlbauer. We submitted the proposal and it got accepted by NASA and we were like, ‘OK, I guess we have to do this now.’”

David Lindlbauer, faculty member in the HCII

And do it they did. The team created MoonBuddy, an AR interface that will help astronauts navigate the lunar surface by plotting routes and avoiding obstacles, assist with scientific experiments and provide critical support during emergencies.

The team's technology incorporates voice control into the AR interface. With MoonBuddy, an astronaut could ask for their location or the location of crew members and see that information layered on a heads-up display. During sample collection, an astronaut could ask MoonBuddy to record a rock in their hand and show data from a visual analysis on the display. In the event of an emergency, MoonBuddy could share crew member vitals and locations.

“NASA doesn't yet have a system that puts all this information in one place where it is easily accessible by astronauts. Augmented reality of this type hasn't existed in space travel before,” Bonilla Fominaya said. “We're developing a novel interface that could actually be used in space exploration.”

The CMU team, composed of students studying augmented and virtual reality, human-computer interaction, information systems, cognitive science, computer science, and art, worked with NASA staff throughout the project. The project culminated in a trip to NASA's Johnson Space Center in Houston this past spring to test MoonBuddy with NASA assets and people.

The team essentially walked around the space center's Rock Yard — a multi-acre test site that simulates lunar and Martian terrain — with a NASA engineer, giving them instructions for using their technology.

It went well. Eventually.

“The first day we were there, we got absolutely demolished, because we had a lot of issues figuring out how to work as a team to make it go smoothly,” Bonilla Fominaya said.

Frazzled and stressed — and maybe a little intimidated by going behind the veil at the space center — the team struggled with how to give their user appropriate instructions for using the interface. Day one feedback? Work better as a team.

Students on the MoonBuddy Team: (Top Row) Rong Kang Chew, Alexandra Slabakis, Joyce (Yunyi) Zhang, Matthew Komar (Bottom Row) Anita (Ningjing) Sun, Angelica Bonilla Fominaya, Jeremia Lo


Angelica Bonilla Fominaya and a NASA engineer test the MoonBuddy interface at the JSC Rock Yard during test week.

“So we had to refactor,” Bonilla Fominaya said. Luckily, the testing schedule gave teams a day between initial testing and their final presentation to work out kinks and bugs. The MoonBuddy team hunkered down and got to work. “We decided to write a good script,” Bonilla Fominaya said. “We decided to be calm and collected when we were presenting what we had. Because we had a good interface. Everything worked.” So they wrote clear testing instructions and a better script for their presentation, which paid off.

“By the end of it, we had something that worked really well and was really effective,” Bonilla Fominaya said.

SUITS has officially ended, but MoonBuddy’s work continues. With NASA's blessing, the team wrote a paper that was accepted to the ACM Symposium on User Interface Software and Technology (UIST 2022). And while there's no guarantee their technology will land on the Moon with the upcoming Artemis missions, Bonilla Fominaya remains optimistic that their work will make a difference.

“I do think that the things we came up with in the challenge help build the foundation for the types of ideas that NASA will have when they’re developing the interfaces for the new space suits,” she said. “Maybe our interfaces won't be directly used in these missions, but if we find out that they use something similar to our work, we'll know that we helped this go further and that our ideas were the foundation for something bigger and better.”

Importantly for Bonilla Fominaya, it made her realize she could achieve her childhood dream.

“It was such an educational experience in teaching us that you don't have to be a rocket scientist to work at NASA,” she said. “You can do things at NASA as artists, designers and engineers working on user-interface design. There are real jobs at NASA for this kind of stuff.”

“It ended up definitely being the most difficult and amazing experience I've had in my college career.”

Mission Control: Reach Out and Touch Space

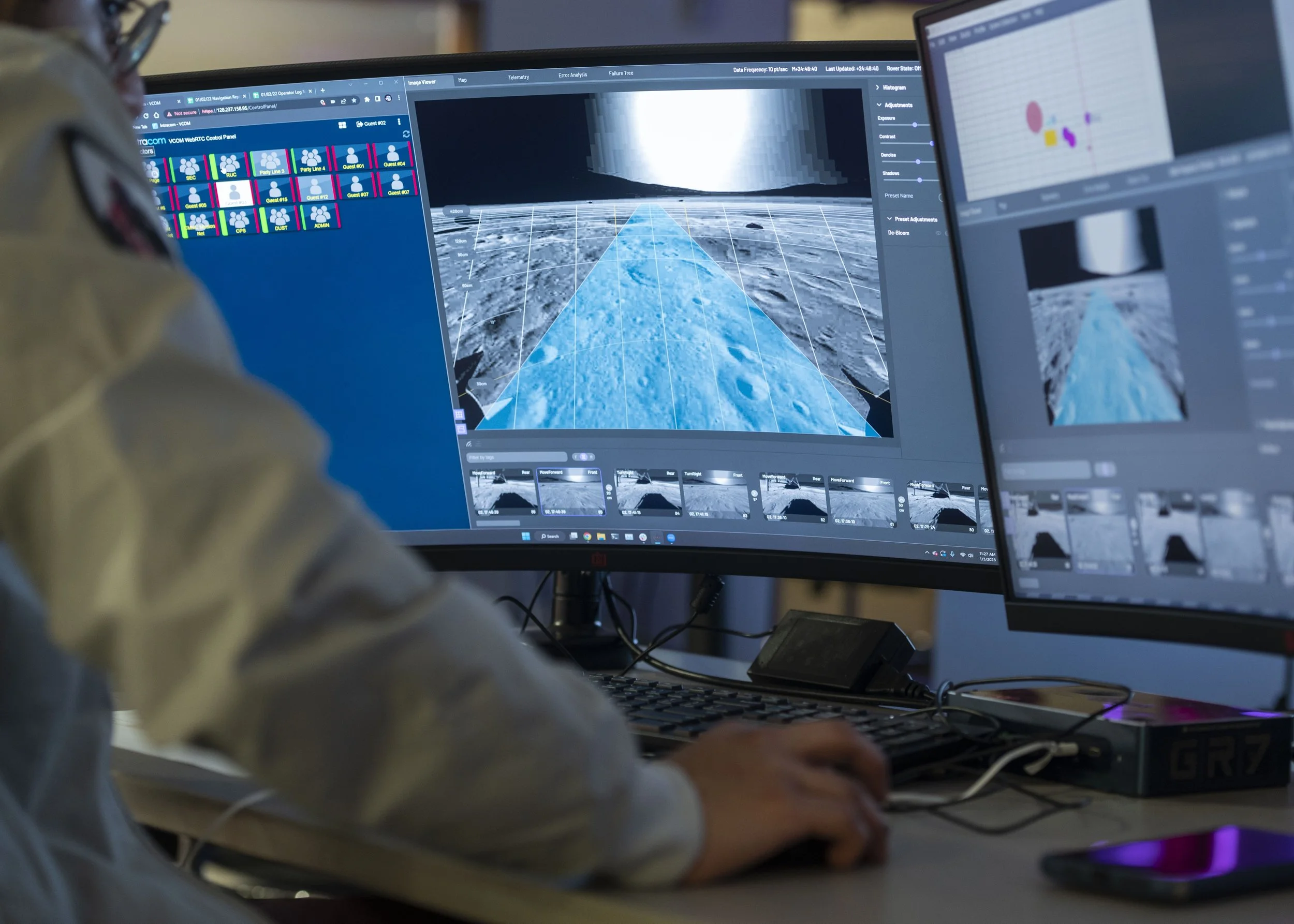

Not long ago, Gates 2109 looked like any other conference room on campus: full of furniture and white boards, ready for meetings, classes or whatever came its way. Now, it's Carnegie Mellon Mission Control, a new command hub in the Gates Center for Computer Science that provides state-of-the-art equipment for crews of the Iris and MoonRanger missions, and future space robotics initiatives.

Designed with modularity in mind, Mission Control sits above the High Bay area — a hotbed of robotics research and development on campus — with giant windows overlooking a sand pit that the Iris team will use to mimic lunar conditions during its mission. Three giant flatscreen displays line the opposite wall, making it easy for everyone in the room to monitor the mission's progress. Just outside the room's main entry is a small space where visitors can sit and observe the action through a large window.

The Iris team at their stations, running a simulated test at Mission Control in the Gates Center for Computer Science.

Hexagonal acoustic tiles line the walls, providing both sound-dampening practicality and a little shout out to the Iris project.

“The hexagonal pattern is reminiscent of the molecular structure of carbon fiber — one of the reasons Iris will be able to get to space, as it allowed us to construct a durable chassis and wheels that weigh very little,” said Iris Missions Operations Leader Nikolai Stefanov, a senior majoring in physics. “That's basically a carbon ring right there,” he said, pointing to the paneling.

Within the control center, operator workstations give crew members space to plan and direct the movements of the rovers, monitor their data and images and communicate with them and each other. Crew members will also see telemetry, localization data and Fault List Evaluator for Ultimate Response (FLEUR) readouts at the workstations.

A flight director will oversee all the action from the back of the room, against the windows looking into the High Bay, perfectly positioned to keep a constant eye on what's happening.


The simulated view of the Iris rover.

Mission Control also contains a separate data room, with servers powering the main control room and a VPN connection to the landing companies that will relay communications from CMU to the lunar rovers. In the case of Iris, that's Astrobotic; for MoonRanger, NASA's Commercial Lunar Payload Services program.

William “Red” Whittaker, who heads the university’s Field Robotics Center and plays a huge role in upcoming lunar initiatives, says this space is vital to mission success.

“There’s the sense that technology makes or breaks the mission. And, of course, it matters to get that right. But it pales in comparison to all the eventualities and contingencies that occur during the mission,” Whittaker said. “If something goes wrong, you have such a short time to address it, patch it and get into action. The only way to do that is to have your team co-located and you need a great facility that technically works in such a way that communications are impeccable and everybody has the same information.”

Carnegie Mellon Mission Control also represents an important milestone for students working on space robotics — and who may have been dreaming about space exploration their entire lives.

“From a team perspective, it makes the project feel very real,” Stefanov said. “It’s happening now. Having this dedicated space cements it as permanent. It isn't just a one-off class or assignment. It’s something that's here to stay. It becomes very much a gateway into space.

“Kids dreamed of becoming astronauts one day,” he continued. “Well, this is a way you get to touch space like never before.”

Raewyn Duvall (ECE 2019), research associate in the Robotics Institute and commander of the Iris mission.

CMU, JPL Work to Ensure One Plus One Always Equals Two

Like Angelica and Nikolai, Rashmi Vinayak always found herself fascinated by space. But her research in coding theory never crossed paths with space exploration. Until now. Vinayak, an assistant professor in the Computer Science Department, studies resource-efficient ways to make computer systems more robust and resilient. Her work has largely focused on how to accomplish this in data storage systems, or data center applications. But a fortuitous meeting led to its possible application in space.

Rashmi Vinayak, assistant professor in the Computer Science Department

“I was describing to some folks from Google that we were looking into protecting computation using coding theory tools. And it turned out that a partner of one of the Googlers works at NASA's Jet Propulsion Laboratory,” Vinayak said. “We got in touch and started having regular meetings.”

Vinayak and JPL are trying to minimize computation errors in space caused by cosmic radiation. The problem isn’t a new one. As altitude increases, cosmic radiation — the high-energy charged particles produced in space — interacts with the silicon used to perform computation and generates errors, known as soft errors, transient errors or bit flips (all different names for the same thing). Vinayak gives the example of sending a simple computation like one plus one.

“Most of the time, you get two,” she said. “But sometimes you don't. How can we do reliable computation on such an erroneous substrate?”

To reduce the likelihood of the “not two” answer, computing systems in space (or in national labs sitting at high altitudes or even air traffic control systems) have two options: performing the computation multiple times and taking the majority answer, a sort of computational “best out of three,” or doing what is called radiation hardening, encasing the actual hardware in materials that protect it from radiation. The former is expensive and data intensive. The latter is expensive and bulky. Neither is a good option for computation in space, especially as accelerators such as graphics processing units become more popular in the field for machine learning tasks.
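The “best out of three” idea can be sketched in a few lines of Python. This is only an illustration: `flaky_add` and its `flip_bit` parameter are made-up names that mimic a bit flip, not anything used by JPL or Vinayak's group.

```python
from collections import Counter


def flaky_add(a, b, flip_bit=None):
    """Add two integers; optionally flip one bit of the result to mimic a soft error."""
    total = a + b
    if flip_bit is not None:
        total ^= 1 << flip_bit  # hypothetical injected bit flip
    return total


def majority_vote(results):
    """Return the value produced by a strict majority of the redundant runs."""
    value, count = Counter(results).most_common(1)[0]
    if count <= len(results) // 2:
        raise ValueError("no majority among redundant results")
    return value


# Run "one plus one" three times; the second run suffers a simulated bit flip.
runs = [flaky_add(1, 1), flaky_add(1, 1, flip_bit=0), flaky_add(1, 1)]
voted = majority_vote(runs)
print(runs, "->", voted)
```

The vote returns the right answer, but only by doing the same work three times, which is the cost the article describes.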


Enter Vinayak's coding theory solution.

“Coding theory revolutionized digital communication decades ago by enabling us to add redundancy in a resource-efficient manner that does not require replication that could increase overhead by 200% or 300%. That's too much in any system,” she said. “Coding theory enables us to add a fractional amount of overhead, for example, 10% or 20%, but still provide the same amount or even more fault tolerance than one can do by replication.”

It’s all incredibly math-dense, but Vinayak illustrates the idea with a deliberately simplified example from data storage, using two integers, A and B. One way to protect them is to store multiple copies (A, A and B, B) on different devices, so if one fails there’s still another copy. With two copies of the data, you need twice the resources to store it.

“Instead, think about this other approach,” she said. “I have A and B, but instead of having two copies of them, I store A, B and A+B. These are all stored on different devices. Let's say A is lost. Now I have B and A+B, so I can get A back with only 50% more overhead.”

In technical terms, A+B would be known as a parity, a mathematical function of the original data that helps protect it. And while her example uses data storage, it's also applicable to computation in a more nuanced way.
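Vinayak's storage example is small enough to write out. The sketch below is a toy, assuming plain Python integers and a dictionary standing in for three separate devices; the names `encode` and `recover` are invented for illustration.

```python
def encode(a, b):
    """Store A, B and the parity A+B, as if on three separate devices."""
    return {"A": a, "B": b, "P": a + b}


def recover(stored):
    """Rebuild A and B when at most one of the three stored units is lost (None)."""
    missing = [name for name, value in stored.items() if value is None]
    if len(missing) > 1:
        raise ValueError("this toy code tolerates only one lost unit")
    a, b, p = stored["A"], stored["B"], stored["P"]
    if a is None:
        a = p - b  # A = (A+B) - B
    elif b is None:
        b = p - a  # B = (A+B) - A
    return a, b


stored = encode(7, 5)
stored["A"] = None  # simulate losing the device that held A
restored = recover(stored)

# Extra units stored, relative to the two original units.
replication_overhead = (4 - 2) / 2  # A, A, B, B
parity_overhead = (3 - 2) / 2       # A, B, A+B
print(restored, replication_overhead, parity_overhead)
```

Either scheme survives the loss of any one device, but the parity version stores three units instead of four.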

“You perform your original computation on the original data units, as well as on this function of the original data. Then the output of the latter can help you recover any of the original computation if it's lost or detect if there's an error,” Vinayak said.
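One simple way to picture this is with a linear computation, where running the function on A+B gives the same result as adding its outputs on A and B. The sketch below assumes that special case, and the function `f` is a made-up stand-in; the article does not say which computations the JPL work covers, and the actual approach is described as more nuanced.

```python
def f(x):
    """Hypothetical linear computation: f(a + b) == f(a) + f(b)."""
    return 3 * x


def outputs_consistent(out_a, out_b, out_parity):
    """Detect an error: for a linear f, the two outputs must sum to the parity output."""
    return out_a + out_b == out_parity


a, b = 4, 9
out_a, out_b, out_parity = f(a), f(b), f(a + b)

# Recovery: if the output for A is lost, rebuild it from the other two outputs.
recovered_out_a = out_parity - out_b

# Detection: flip the lowest bit of one output and the check fails.
corrupted_out_a = out_a ^ 1
clean_ok = outputs_consistent(out_a, out_b, out_parity)
corrupted_ok = outputs_consistent(corrupted_out_a, out_b, out_parity)
print(recovered_out_a, clean_ok, corrupted_ok)
```

Here one extra computation protects two original ones, rather than repeating each of them three times.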

While still in its nascent stages, the JPL collaboration could influence how calculations are performed in future space missions and allow more of those calculations to happen reliably in space instead of sending all the data back to Earth. Right now, JPL is testing Vinayak's coding theory approach under different radiation conditions in the lab to see how it responds.

Vinayak can't hide her joy at being involved in space research. “Even in childhood, I was fascinated by space,” she said with a grin. “It's the visceral excitement of space. It’s pushing the frontier of human knowledge. There’s so much unknown about outer space, and getting knowledge about space can help us know more about ourselves and how the universe came into being. I'm super excited about this project.”